iTEP Receives Official Recognition from Costa Rica’s Ministry of Education

We are thrilled to announce that the iTEP Academic Plus and iTEP Slate Plus tests have received official approval from the Ministry of Public Education of Costa Rica (MEP). The decision was formalized on May 22, 2025, in an official circular issued by the Ministry, which lists both iTEP exams among the recognized language proficiency assessments. […]

Building Bridges, Not Barriers: Supporting English Language Learners Worldwide

Dear Valued iTEP Community: Recent Trump administration actions (including halting new student visa interviews, increasing scrutiny on international applicants, and moving to defund federal English learner programs) threaten the foundation of international education and workforce development. These policies create uncertainty for students, disrupt institutional planning, and risk undermining America’s global competitiveness by discouraging talented individuals from contributing […]

iTEP inFocus – May 2025 Newsletter

A Message from Our CEO: Dear Valued iTEP Community, Welcome to the first edition of the iTEP International quarterly newsletter! Since our founding in 2002, iTEP has been dedicated to providing fast, reliable, and innovative English language assessments that serve thousands of schools, universities, and businesses worldwide. Our mission is to empower non-native English speakers […]

iTEP and Grepp Partner to Revolutionize Workforce Assessment in Korea and Worldwide

This collaboration brings together Grepp’s expertise in IT skill assessment and iTEP’s renowned English language proficiency testing, offering comprehensive workforce and candidate evaluation solutions to clients across South Korea and worldwide.

iTEP and American Language Academy (ALA) Join Forces in Africa

iTEP announces a strategic partnership aimed at bringing affordable, on-demand English language testing to students, schools, and companies in Africa.

Innovative Teaching Methods in English Language Programs

English language education is constantly evolving, and innovative teaching methods are at the forefront of this transformation. Learn the latest strategies.

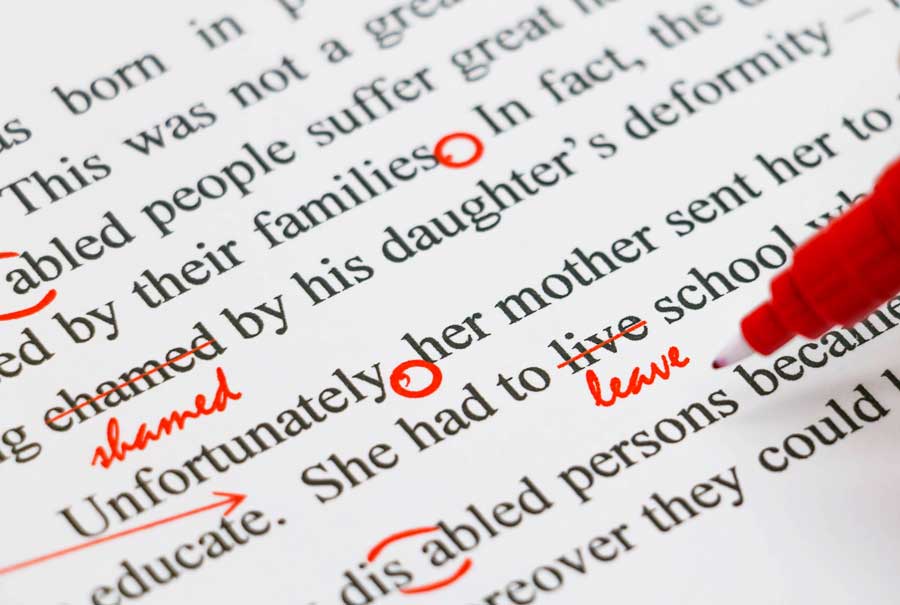

Conquering Common Grammar Mistakes by English Learners

Learning English can be tough, especially when it comes to grammar. But don’t worry! With practice and some helpful tips, you can fix common grammar mistakes.

Best Practices for Conducting English-Proficient HR Interviews

Discover straightforward guidelines to help Human Resources professionals conduct effective English proficiency interviews.

The ROI of Investing in Corporate English Language Training

In the business world, communication is the cornerstone of success, and English serves as the common ground. Explore the ROI of employee language training.

5 Engaging English Learning Activities That Make Learning Fun

Learning English can be an adventure, and incorporating fun activities into the process can turn each challenge into a playful experience. Here are five English activities that ESL (English as a Second Language), ELL (English Language Learner), and IEP (Intensive English Program) educators can introduce to make English learning enjoyable for students. 1. Crafting Comics […]