A Brief History of English Assessment

By iTEP Chairman Perry Akins

The same year TOEFL was introduced, I entered the field of international education. It was 1964. Before that, most colleges and universities had no English language admission requirement. You showed up and prayed.

TOEFL was a godsend: finally, an objective way to know about applicants’ English skills. At this point, the test was paper-based, of course, and only included multiple-choice questions. It was basic, but it was so much better than nothing that English assessment essentially continued in this form for more than two decades. From 1970 to 1998, I was President of ELS Language Centers. During this period, I observed the price of English tests rising, with little change in the product.

In the ’90s, TOEFL’s first competitor emerged: IELTS. The introduction of speaking and writing sections moved the industry forward and began to distinguish between those with a real command of the language and those who had simply mastered the ability to test well. There was, however, still a long way to go.

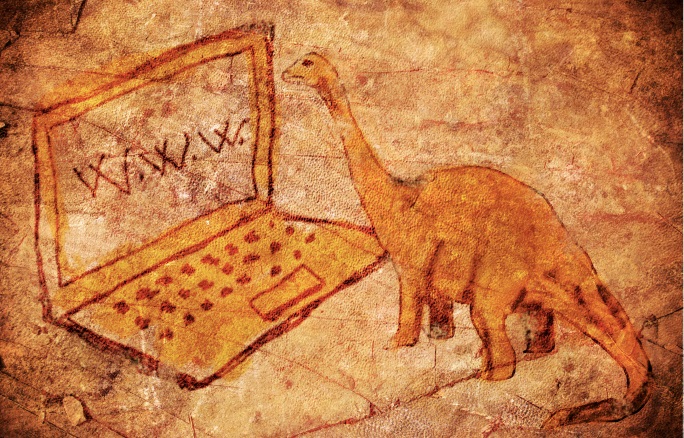

By 2000, the rise of the Internet was in full swing. Yet English assessment had not taken advantage of this tool. Tests were still largely paper-based (TOEFL iBT, the Internet-Based Test, wouldn’t launch until 2005), and as technology increased the speed of communication and business, the scheduling and grading of English proficiency tests remained incredibly slow and laborious. Students had to wait weeks or months for the next pre-scheduled test date, and institutions didn’t get the results for some 14 days.

Plus, TOEFL and IELTS were (and continue to be) incredibly long and expensive tests, lasting four hours and charging $200+. My frustration with this state of affairs, on behalf of both students and institutions, led to the initial conversations that eventually became iTEP.

When we launched in 2008, our competitors had a 44-year head start, but we had the advantage of being able to rethink English testing from the ground up. YouTube had launched a couple of years before, and on-demand entertainment was clearly the next trend for young people. So, we thought, what is the purpose of these pre-set test dates? Why can’t an English test be scheduled on-demand? We also figured, in the age of email, couldn’t we get results to institutions quicker? Indeed we could–we now offer 24-hour grading for English language programs.

Furthermore, we wondered, why does the exam have to last four hours? Extensive testing prior to our launch revealed that a 90-minute test was no less accurate than the standard half-day. We went on to address the price of English assessment–we’re still not sure why a test needs to cost more than $100, let alone $200+. We also took a new look at what information the results provide, ultimately using streaming technology to let institutions hear the speaking samples generated by their students or applicants.

In some respects, the rest of the English assessment industry has caught up. In others, it has not. We’re thrilled that more than 700 institutions share our vision for an English test designed to provide convenience, accessibility, and accuracy to test-takers and schools in 2016. Here’s to the next chapter of English assessment history.

Read this article as it originally appeared on Perry Akins’ LinkedIn page.